Thoughts on Visual Programming

It's a shame we never moved past monospaced ASCII text.

My first programming experience, back when I was single digits in age, was a demo CD of Delphi 1.0, a so-called “RAD” tool. Delphi (like Visual Basic) allowed you to drag and drop components like buttons and lists into a window and then link various events like clicks to event handling methods. The methods themselves had to be written as monospaced ASCII text (Object Pascal, for the unaware).

Later I played around with FrontPage and Dreamweaver, which enabled me to design websites visually. Very similar in spirit to Delphi, only targeting HTML instead. Events could be mapped to JavaScript functions, which had to be written as monospaced ASCII text.

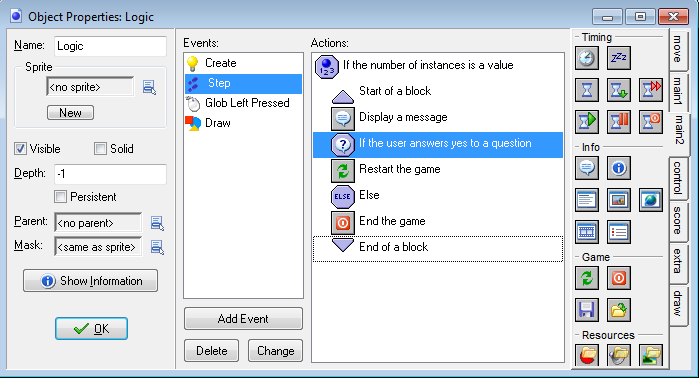

Next came Game Maker, the pre-Studio version developed by Mark Overmars. It went even further on the visual aspects. It included an image editor for sprites and backgrounds, a tilemap editor, and a visual editor for “objects”, which included a drag-and-drop interface for programming the object’s behavior[1]. Actual visual coding! But the only way to have any sort of abstraction was to create a “Script” and write monospaced ASCII text (which sadly didn’t map 1-to-1 to the drag-and-drop interface).

From what I can tell, over the years we’ve only moved backwards towards monospaced ASCII text. Even things that should be visually edited like GUIs are now mostly written as monospaced text, maybe with “live preview” to help out. Computers have made absolutely incomprehensible leaps in performance and capability since the 1940s, but we still program them like we did in the 1980s! Even back in the day, all that visual editors were really doing was writing monospaced ASCII text under the covers.

The standard interface to most programming language compilers is an emulator of a device from the late 1800s. The most popular editor these days, Visual Studio Code, is downright allergic to toolbars. We use tools whose only job is to move ASCII characters around to avoid bike-shedding about where to place them on a grid, even though the specific positioning of a curly bracket matters very little as long as indentation is done properly. Our IDEs have to repeatedly parse monospaced ASCII text to develop an index with all the types and functions in the project, something the compiler also has to do all over again when invoked. It’s silly.

How did we end up here?

Asking a Robot to Bang Rocks

As a little side-note, I find it absolutely hilarious that the current “state-of-the-art” in software development (according to some people at least) is to chat with the closest thing to artificial intelligence we’ve built (so far), and to ask it to spit out monospaced ASCII text and run commands in a teletype emulator. Regardless of how effective LLMs are at the task, I find the whole setup hilarious, like a caveman asking a robot to bang rocks in order to start a fire. LLMs are only going to entrench this local optimum we’re currently stuck in.

The Advantages of ASCII Text

In order to understand why Visual Programming doesn’t seem to catch on outside of some very specific niches, we have to first understand why ASCII text continues (and likely will continue) to be the dominant programmer-machine interface.

The platform APIs are provided (ultimately) as ASCII text. If I want to program an application targeting any of the current mainstream platforms, an API described in a language whose interface is ASCII text is going to be involved at some point. When the limitations of the visual interface are hit, and the programmer has to “drop down” to ASCII text, it’s almost always very painful.

The languages that we use do not lend themselves well to visual programming (see: modern Game Maker’s awful node-based UI). Working with structured programming in the form of a flow-chart or node-graph results in a massive loss of information density and development speed.

Directly manipulating the AST of a programming language, as in JetBrains MPS, is worse in nearly every way than working with ASCII text. All the disadvantages with almost no advantages. The loose nature of ASCII text is a feature, not a bug. Being able to copy-paste and tweak some characters, or “find-and-replace” a bunch of times to refactor some piece of code (with “broken” intermediate steps), gets you to the destination much faster than having to follow a limited set of always valid but otherwise rigid transformations.

We have lots of tools that work with ASCII text, most available for free or very cheaply. It is a lot easier to develop tools that work with ASCII text than fancy visual editors.

Most Visual Programming tools that do exist are designed as low-barrier-to-entry solutions for non-programmers, rather than tools meant to increase the productivity of expert programmers. The so-called “no-code” tools targeted at large businesses are all vendor-lock-in scams (IMO).

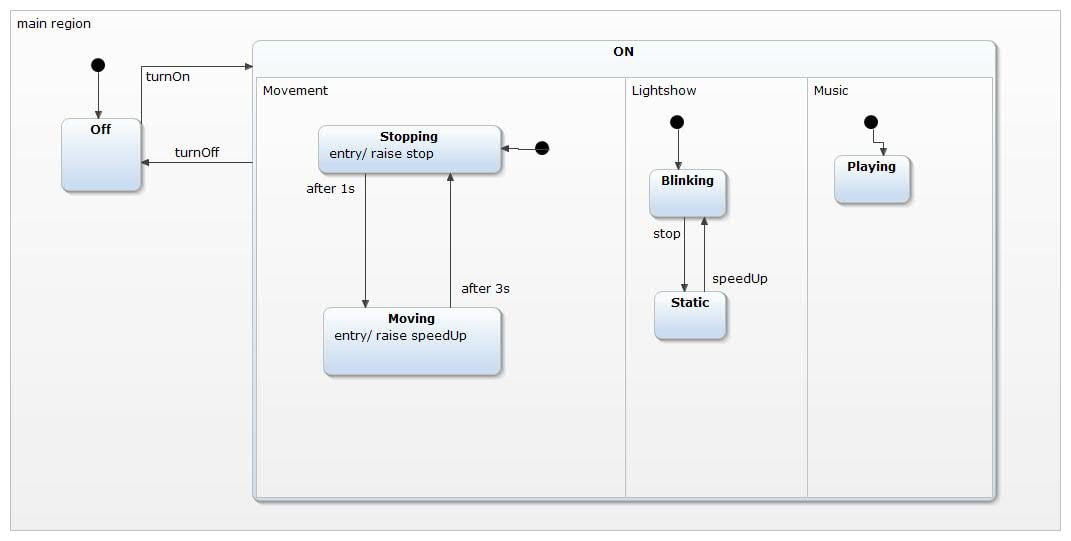

It also doesn’t help that programmers have been burned very badly by the one major industry attempt at professional “visual programming” (or architecting rather): UML. UML class diagrams map very poorly to actual code, something everyone who had to deal with UML learnt very quickly and very painfully. Harel Statecharts (also part of UML) are actually pretty great, but they weren’t the focus in development circles[2].

If programming is to move away from ASCII text, then Visual Programming needs to shift focus from being low-barrier-to-entry (even if that’s a nice property to have), to being a productivity boost for experts. It must surpass, in terms of development speed, all the shortcuts ASCII text allows, which these days includes having a robot do some of the work for you.

Where Visual Programming Succeeded

While most mainstream software development activities have completely abandoned visual programming, including visual editing of parts of the program (like the UI) or even having a freaking build button in the IDE, that’s not true of every domain.

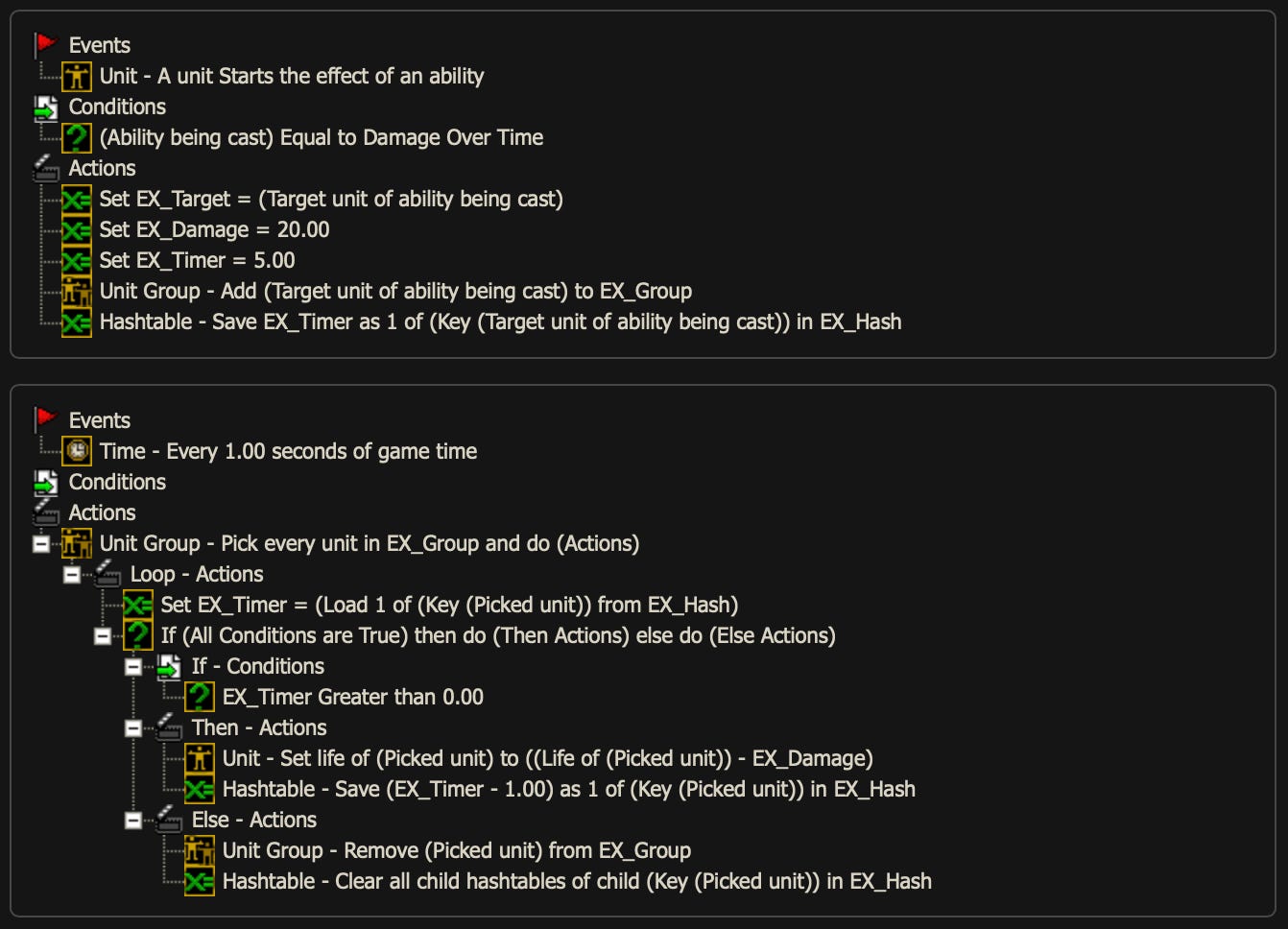

Tools like Construct 3 and Game Maker are pretty popular despite their UX issues[3]. Godot and Unity don’t have official visual programming toolkits, but they are otherwise highly visual. Unreal Engine has Blueprints, a low-barrier-to-entry solution for non-programmers (e.g., level designers). RPG Maker, as long as you’re making a bog-standard turn-based 2D JRPG, requires no “programming” in the usual sense. I also have a soft spot for the extremely powerful Warcraft 3 level editor[4].

So at least for game development some degree of Visual Programming is still around. But it is very high level and with very poor abstraction capabilities. It’s not really a serious visual alternative to programming in monospaced ASCII text.

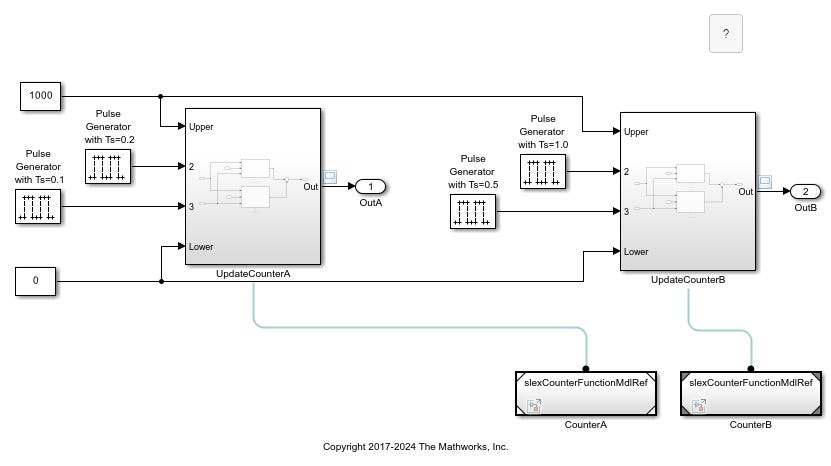

Non-software engineering fares better. Tools like Simulink and Amesim are almost entirely geared towards visual modeling. Simulink has MATLAB under the hood but Amesim doesn’t have any actual textual language as a base. The Modelica language has a textual representation, but the tools all provide graphical modeling.

These tools, unlike the game development ones, have proper visual-abstraction capabilities, and can be extremely expensive (tens of thousands of dollars).

Why have these tools succeeded where others have failed? I believe the main reason is what I like to call immediacy. It’s how close the level you’re working at is to the level you’re thinking in, how quickly you can get feedback, and how easily you can reflect it back onto the code.

This is related to, but not equivalent to, low-barrier-to-entry. Software like Simulink and Amesim is not low-barrier-to-entry; these tools need extensive training to use effectively, and their core audience is made up of experienced professionals.

But they have very high immediacy. I can drag and drop a variable from a port of a block in an Amesim model and instantly get a plot showing how its value evolved over time in a simulation. There is an absolutely monstrous library of ready-to-use components (6500+), at different levels of abstraction, with familiar-looking icons, that can be used to build more complex systems. I don’t mean packages that can be installed; they’re all part of the “standard library”. It also includes many domain-specific UIs for specific problem domains with custom visualizations.

Some customers report an 80% reduction in development time. That’s easily worth the tens of thousands of dollars a single-seat license costs. Is there any visual programming platform for software development that can make a similar claim? Yes, RPG Maker, if you’re making a turn-based 2D JRPG and don’t mind its defaults.

Not Drawing, Seeing!

For programming to escape its monospaced ASCII shackles, focus must be placed on what actually helps, and it’s not drawing lines and rectangles. We’ll talk about node-graphs in a bit because they enable certain things, but rectangles and polylines are not what changes the game.

The really helpful part of Visual Programming is not the “drawing”, it’s the “seeing”[5]. Even in the monospaced ASCII world we make use of visualization: Indentation and syntax highlighting are visual aids to help our brains map ASCII text to a mental AST more quickly. Debuggers let us see how variables change over time as we step through the code. In VSCode I can hover over, e.g., a Rust identifier and get useful information like its documentation, type, size, etc. or jump to its declaration and implementations.

These are all helpful forms of visualization, but also very limited. We can (and should) take this further, much further.

Visualized Programming

One of my favorite things a while back was the Elm Reactor. It was a time-traveling debugger that took advantage of Elm’s event-driven architecture and functional purity to allow “rolling back time” (with a literal slider) on a running application, unlike post-mortem time-traveling debuggers like rr. After rolling back time you could continue to interact with the application and “change the future”. You could even change the code to some extent and keep going! It was really incredible to me.

Creating something like the Elm Reactor is only possible if the architecture of the application allows for it. Elm imposed an architecture, and that made the Reactor work for every application developed in Elm (until they broke it, but that’s another story). General-purpose time-traveling debuggers cannot compete with something like Elm Reactor in performance or UX, because they have to support all sorts of code.
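To make the architectural point concrete, here is a minimal sketch in Python of why a pure update function plus an event log makes “rolling back time” cheap. It is not Elm, and it is not how the Reactor was actually implemented; `Model`, `update`, and `TimeTravelLog` are hypothetical names made up for this illustration.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class Model:
    """Hypothetical application state: a single counter."""
    count: int = 0


def update(model, event):
    """Pure update: the next state depends only on (state, event)."""
    if event == "increment":
        return Model(model.count + 1)
    if event == "decrement":
        return Model(model.count - 1)
    return model  # unknown events leave the model untouched


class TimeTravelLog:
    """Records every event so any past state can be rebuilt by replay."""

    def __init__(self, initial):
        self.initial = initial
        self.events = []

    def dispatch(self, event):
        self.events.append(event)
        return self.state_at(len(self.events))

    def state_at(self, step):
        # Replaying a prefix of the log is the "slider".
        return reduce(update, self.events[:step], self.initial)

    def rewind(self, step):
        # Drop everything after `step`; later events change the future.
        del self.events[step:]
        return self.state_at(step)


log = TimeTravelLog(Model())
for event in ["increment", "increment", "increment", "decrement"]:
    log.dispatch(event)

past = log.rewind(1)                # drag the slider back to step 1
future = log.dispatch("decrement")  # and continue from there
```

Because `update` has no side effects, replaying the log always reproduces the same states. A debugger for arbitrary code cannot assume that, which is why it has to record far more than a list of events.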

Architecture and semantics have many second order effects, and that is also where “nodes” come in. But representing a function’s body as a flowchart is almost completely useless[6]. Most of the time a function’s control flow is pretty obvious, and your brain can already “see” everything it needs just fine. A drawing is only useful if it helps you see something that you otherwise couldn’t, or at least not as quickly.

At the level of an individual function, ASCII text is a very good interface. Smalltalk understands this for example. You’re editing a live program when you program in Smalltalk: there are no source files, there is no ASCII text. You directly manipulate classes and methods that exist in memory as bytecode, but they’re presented to you, and edited, as monospaced ASCII text. At that level text works just fine.

In Enso it’s possible to visualize the data flowing through individual nodes as it goes through them and changes over time. It has a textual language that is isomorphic (except for node positioning) to the visual programming side. You can edit the code either visually or as text; it is the same language. Text and graphics are not at odds.
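As a rough illustration of both ideas (one language with two views, and seeing values at each node), here is a toy sketch in Python. It has nothing to do with Enso’s real syntax or implementation: the `->` pipeline notation, the `OPS` table, and the `parse`, `render`, and `run` helpers are all assumptions invented for this example, and it only handles linear pipelines.

```python
# Hypothetical operations available as nodes; not Enso's library.
OPS = {
    "double": lambda xs: [x * 2 for x in xs],
    "evens": lambda xs: [x for x in xs if x % 2 == 0],
    "total": lambda xs: sum(xs),
}


def parse(text):
    """Text -> graph. Edges are implied by order in a linear pipeline;
    they are kept to show what a visual editor would draw."""
    nodes = [part.strip() for part in text.split("->")]
    edges = [(i, i + 1) for i in range(len(nodes) - 1)]
    return {"nodes": nodes, "edges": edges}


def render(graph):
    """Graph -> text: the inverse of parse for linear pipelines."""
    return " -> ".join(graph["nodes"])


def run(graph, value):
    """Evaluate the pipeline, recording the value leaving each node."""
    trace = []
    for name in graph["nodes"]:
        value = OPS[name](value)
        trace.append((name, value))
    return trace


source = "evens -> double -> total"
graph = parse(source)
trace = run(graph, [1, 2, 3, 4])
# trace holds one (node, value) pair per node: the data a visual
# editor could display next to each box.
```

The round trip `render(parse(source))` gives back the original text, so neither view is the “real” one. The `trace` is the part that helps an expert: it shows what each step produced without stepping through a debugger.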

Game Maker could have worked like that too, drag-and-drop and text being two ways to edit the same language, especially in the older list-oriented design. But in Game Maker’s case it would mostly be helpful to newbies. The drag-and-drop UI provides no advantages to an expert. Enso does, because of the visualization. This is what I want you to keep in mind: seeing, not drawing.

The Power of Nodes

Where nodes provide value is at a higher level. In order to provide value they must let you “see” something you otherwise would struggle to know, specifically the application’s inner workings. I don’t mean visualizing a class or package diagram, that’s not really helpful unless you’re completely unfamiliar with the codebase.

I mean the drawings must reflect the actual logic of the application; they must help you see what it does. For example, a Harel Statechart (a hierarchical form of State Machine) lets you make application states and their transitions explicit, and see the whole thing in a single screenful. You can then select a particular state and “dig down” to see the internal states it can be in, and so on and so forth.

Harel Statecharts also allow for concurrent state machines, which can send events to each other, so you can program complex concurrent applications with them. If you’ve already been converted to the Church of Coroutines, it’s that on steroids.
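For readers who haven’t met them, the hierarchy is the part that keeps the diagram small. Below is a minimal sketch in Python, assuming a hypothetical game with a `Menu` state and a `Playing` state that contains `Running` and `Paused`. It leaves out most of what real statecharts offer (entry and exit actions, initial substates, history, and the concurrent regions mentioned above), and the `State` and `Machine` classes are made up for this illustration.

```python
class State:
    """A state that may be nested inside a parent state."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.transitions = {}  # event name -> target State

    def on(self, event, target):
        self.transitions[event] = target
        return self

    def path(self):
        # Full path from the outermost state, e.g. "Playing/Paused".
        prefix = self.parent.path() + "/" if self.parent else ""
        return prefix + self.name


class Machine:
    def __init__(self, initial):
        self.current = initial

    def send(self, event):
        # Try the current state first, then bubble up through the
        # enclosing states; this is what the hierarchy buys you.
        state = self.current
        while state is not None:
            if event in state.transitions:
                self.current = state.transitions[event]
                return True
            state = state.parent
        return False  # no state on the path handles this event


menu = State("Menu")
playing = State("Playing")
running = State("Running", parent=playing)
paused = State("Paused", parent=playing)

menu.on("start", running)
running.on("pause", paused)
paused.on("resume", running)
playing.on("quit", menu)  # declared once, applies to every substate

game = Machine(menu)
game.send("start")
game.send("pause")
where = game.current.path()  # we are inside Playing, in Paused
game.send("quit")            # handled by the enclosing Playing state
```

The `quit` transition is drawn once on `Playing` instead of once per substate. In a flat state machine the number of arrows grows with every state added; in a statechart you collapse `Playing` into a single box and only dig down when you need to.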

But I’m not suggesting we should all be programming in hierarchical state machines. There will be many applications where that is not a very natural way of working. The point is thinking in terms of “seeing”. What helps programmers “see” what they need to see? The UX to edit the application’s logic should reflect this, such that mapping the results of what was “seen” back to the code is as easy as possible.

In a game engine, that could be a live editor experience, where you can pause the game, click on an enemy character, and then tweak its AI script. Immediacy! The script could be a state machine, a flow chart, an event list, or plain ASCII text. The choice is nearly irrelevant in the face of the live editor experience.

Thinking in those terms, how to maximize immediacy, is what matters. How quickly can the programmer find out what they need to find out? How quickly can they map their findings back to the code? Optimizing for that is what Visual Programming should be about.

[1] The visual programming capabilities of Game Maker have only gotten worse with time, with the current version having replaced the simple list-oriented drag-and-drop interface with an extremely clunky node-based editor that is the complete opposite of “information dense”. The editor also loves to crash on macOS for whatever reason. But the UI looks like it came out of a sci-fi movie now, so I guess it’s all worth it?

[2] Harel Statecharts have found great success in model-based design. Stateflow from MathWorks (makers of MATLAB) is a great example. Extremely powerful.

[3] For Construct 3 the main issue is that it is basically impossible to abstract low-level actions into high-level actions in its visual programming language. Game Maker has the same issue but makes it worse by using a low-information-density node-graph UI these days.

[4] We have its capabilities to blame for DotA and, in turn, League of Legends and the entire MOBA genre. This despite Warcraft 3 being a real-time strategy game with minor RPG elements. That’s how powerful the trigger editor was.

[5] Mr. d7samurai likes to call this Visualized Programming instead of Visual Programming because everyone immediately thinks of node-graphs when you say visual and tunes out. The visualization is what is important, not the drawings, but do note that nodes are also a form of visualization.

[6] Except for newbies I guess? Or some insanely convoluted function you shouldn’t really be writing that way to begin with.

Have you looked into the Hazel editor? It has the good things about ASCII and the good things about type-safe editing of ASTs. https://hazel.org

Having worked on a couple of "no-code" systems over the years, I'll tell you that in general visual programming systems only work if you limit the domain severely. You talk about Game Maker and say that it only allows 2D RPGs. Yep, exactly. That's the only way it works. Visual state charts are great, but they only work for state charts, not general programs, and to be honest they only scale so far. Editing large state charts is annoying.

I think it's instructive to look at the hardware side of the house because they've been dealing with this split between graphics and text for a long time. Things like schematic capture are graphical. The job there is to select chips from a library and "wire them up" virtually. That's an inherently graphical sort of task and the user benefits from seeing the visual representation. But when you go to design a complex CPU, for instance, things suddenly go to Verilog and ASCII text. Why? Because I'm effectively programming and my task is to specify very complex behavior. Could I do that graphically? Yes, but it's cumbersome.

In fact, that's the issue with most visual programming systems--they are cumbersome. The user cannot see the whole program at once. Changing behaviors requires clicking on this, reading some text (ironically), clicking on this other thing, editing a bit of text, etc. Click, edit, click, edit. That sucks. Better to just edit, edit, edit in a text editor. That's one reason that schematic capture being visual works better. It's more click, click, click, edit, click, click, click, edit. Yes, every now and then you need to name a bus or a component, but otherwise you're pretty much just selecting, moving, and connecting things, all done with the mouse.

Anyway, I think the answer to why programming is still mostly ASCII text-based is that ASCII-text is the best way to do most programming. Where a graphical representation of part of a program makes a lot of sense (e.g., state charts), then visual tools can be used to generate code for linking with other parts of the program written in ASCII text. In a certain sense, ASCII text sucks, except for all the other things we've tried which suck worse.